Source: Chat With Your Enterprise Data Through Open-Source AI-Q NVIDIA Blueprint | NVIDIA Technical Blog.

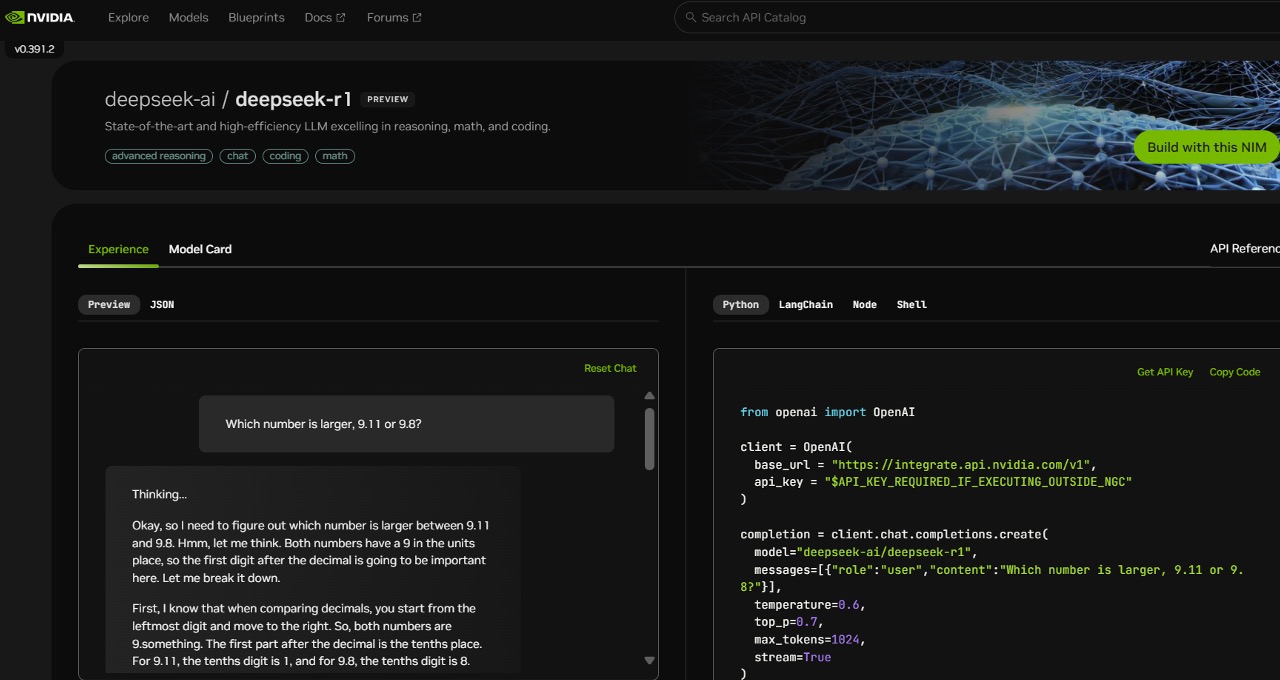

NVIDIA has released AI-Q, a free, open-source NVIDIA Blueprint that simplifies building enterprise AI agents that converse with internal data securely and at scale. It targets companies drowning in unstructured information (Gartner estimates that up to 68% of it goes unused). AI-Q agents can reason across diverse data sources, such as PDFs, emails, databases, chat logs, images, and tables, and return fast, accurate answers through semantic search, retrieval-augmented generation (RAG), and live web search integrations (e.g., Tavily).

The blueprint comprises three core components:

- NVIDIA NIM microservices for high-performance model inference,

- NVIDIA NeMo Retriever microservices for fast multimodal ingestion, embedding, and reranking,

- NVIDIA NeMo Agent toolkit, an open-source library that provides framework-agnostic profiling and optimization for production AI agent systems.
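The retrieval flow these components support (embed documents, retrieve candidates by semantic similarity, rerank the shortlist, then ground the model's answer in the top passages) can be sketched in a few lines. This is a toy, self-contained illustration, not code from the blueprint: bag-of-words count vectors stand in for a real embedding model, a simple term-overlap score stands in for a reranking model, and the corpus and all function names (`embed`, `retrieve`, `rerank`, `build_prompt`) are hypothetical. The resulting prompt is what would be sent to an inference endpoint such as a NIM-hosted LLM.

```python
import math
import re
from collections import Counter

# Hypothetical toy corpus standing in for ingested enterprise documents.
DOCS = [
    "Q3 revenue grew 12 percent driven by data center sales",
    "The VPN must be enabled before accessing internal dashboards",
    "Data center expansion is planned for the next fiscal year",
]

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse bag-of-words count vector."""
    return Counter(tokenize(text))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Stage 1 (semantic search): score every document against the query, keep the top k."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def rerank(query: str, candidates: list[str]) -> list[str]:
    """Stage 2 (reranking): rescore only the shortlist with a second scorer.
    Toy scorer: the fraction of distinct query terms found in the candidate."""
    q_terms = set(tokenize(query))

    def score(doc: str) -> float:
        return len(q_terms & set(tokenize(doc))) / len(q_terms)

    return sorted(candidates, key=score, reverse=True)

def build_prompt(query: str, context: list[str]) -> str:
    """Stage 3 (RAG): prepend the retrieved passages so the LLM answers from them."""
    ctx = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{ctx}\n\nQuestion: {query}"

query = "How did data center sales affect revenue?"
top = rerank(query, retrieve(query, DOCS, k=2))  # revenue document ranks first
prompt = build_prompt(query, top)
print(prompt)
```

The two-stage split mirrors a common design choice: a cheap similarity search narrows a large corpus to a few candidates, and a more expensive scorer is applied only to that shortlist.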

In sum, AI-Q offers enterprises a scalable, secure, and customizable reference implementation to unlock the value of their data using agentic AI.
