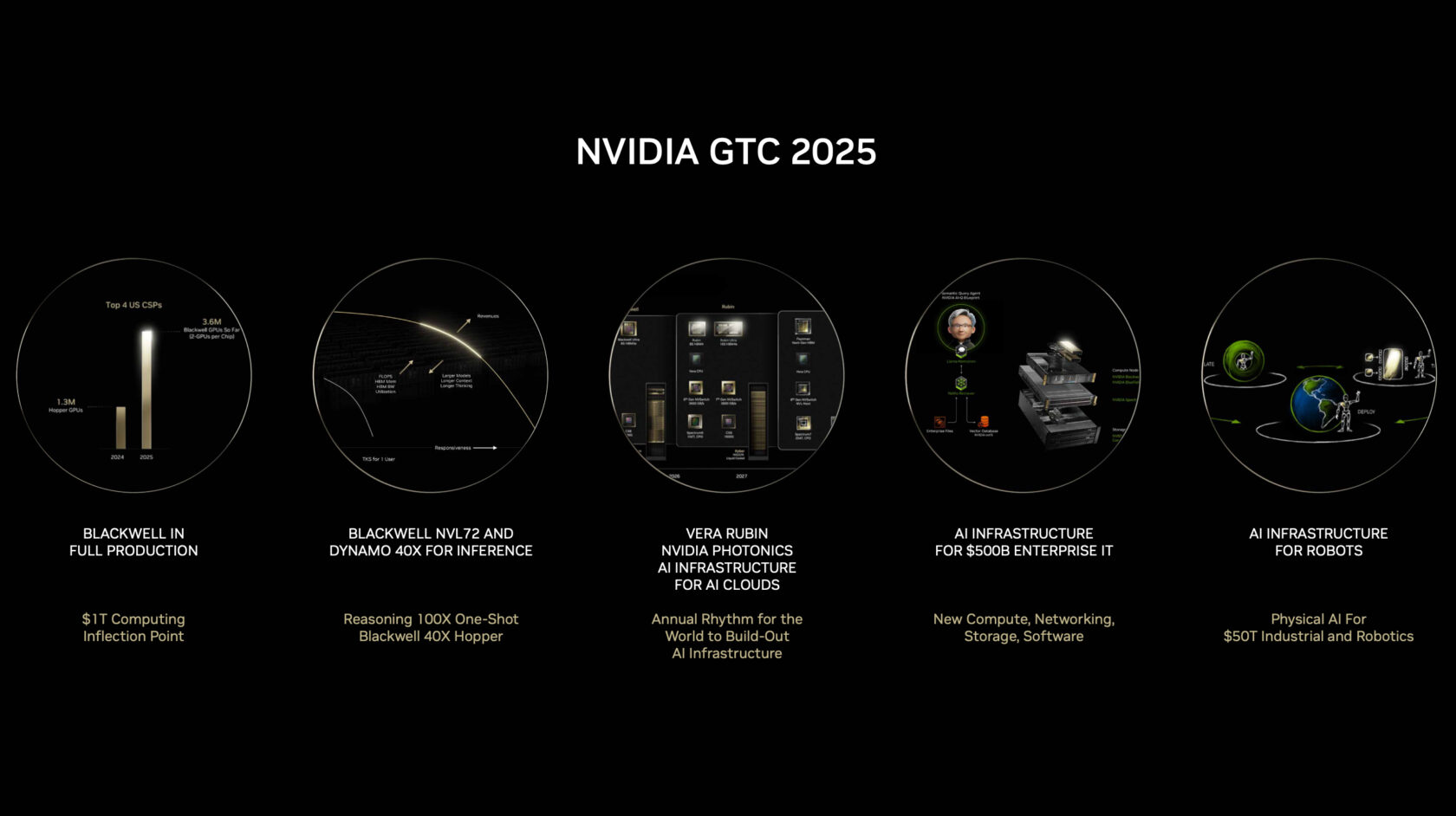

At the end of 2025, NVIDIA reaffirmed its position as the leading company in artificial intelligence and high-performance computing. The company posted record revenue, driven primarily by exceptional demand for GPUs used in data centers, cloud services, and AI models. This growth was fueled by the global expansion of generative artificial intelligence and large language models.

Key developments at the end of 2025 included:

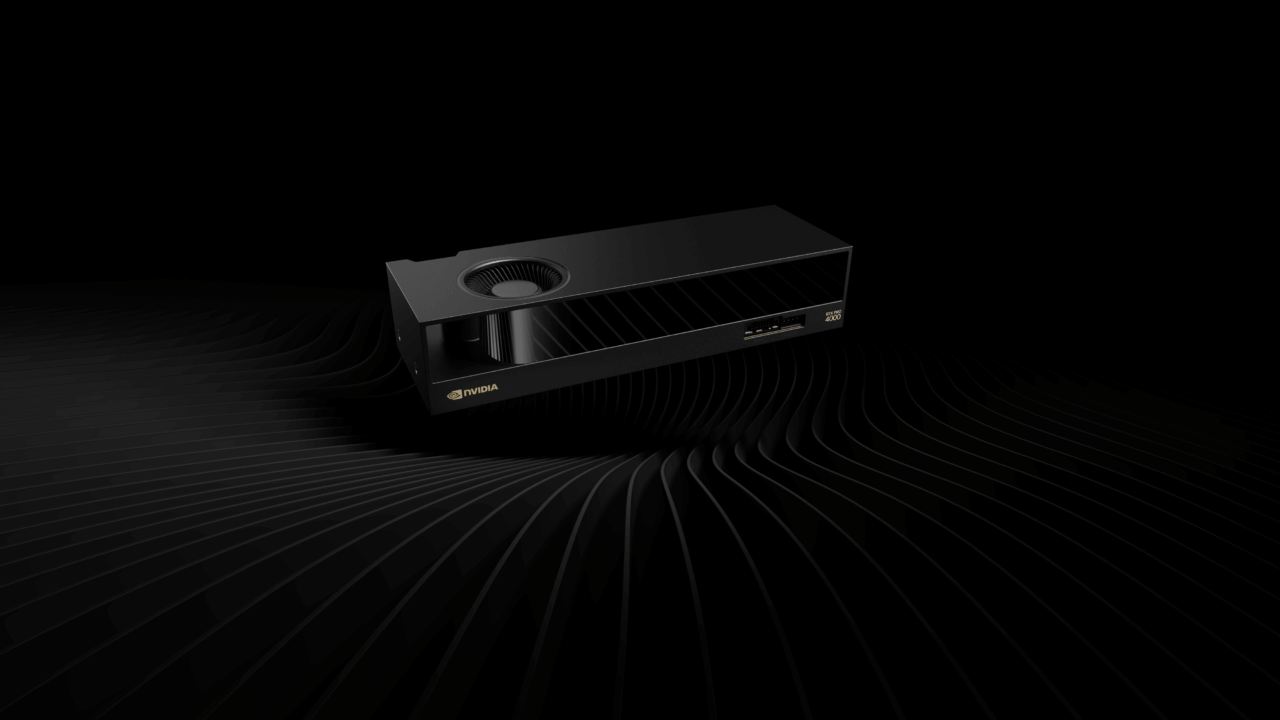

• Strong growth in data centers: AI infrastructure became the company’s main revenue source.

• Advancements in graphics and gaming: NVIDIA introduced DLSS 4.5, which uses AI to generate multiple frames and deliver significantly improved image quality.

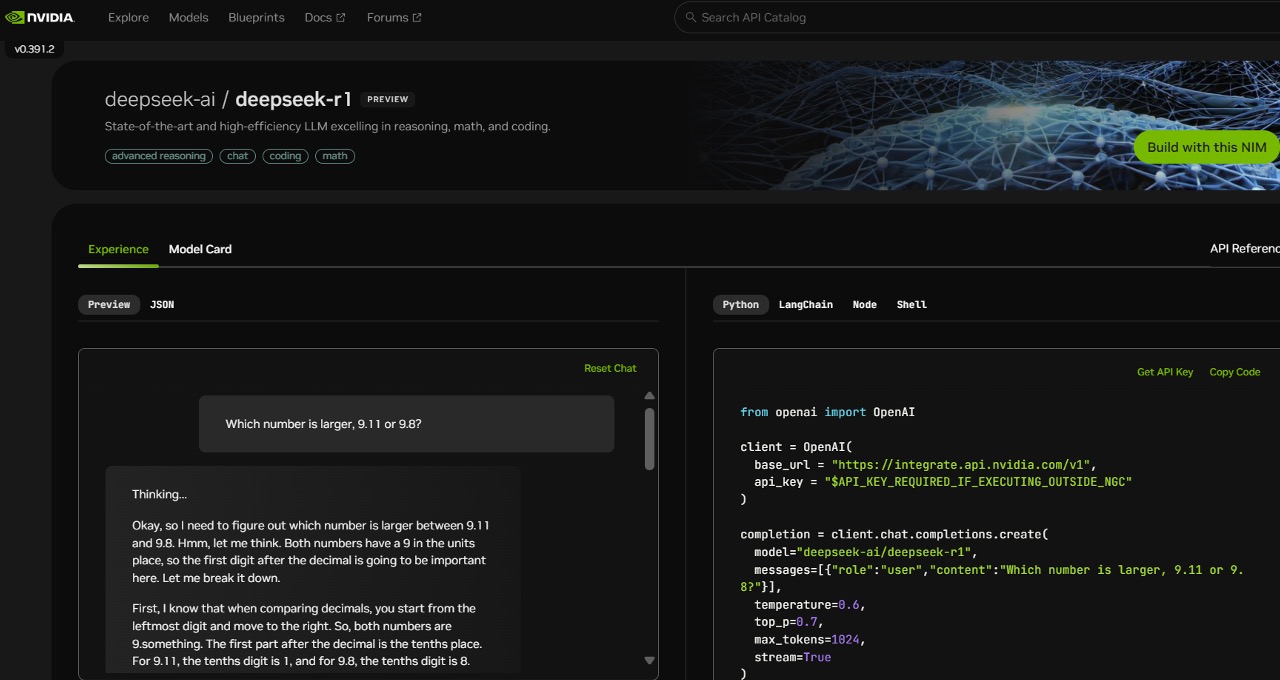

• Strategic partnerships: The company strengthened collaborations with major technology companies and cloud service providers.

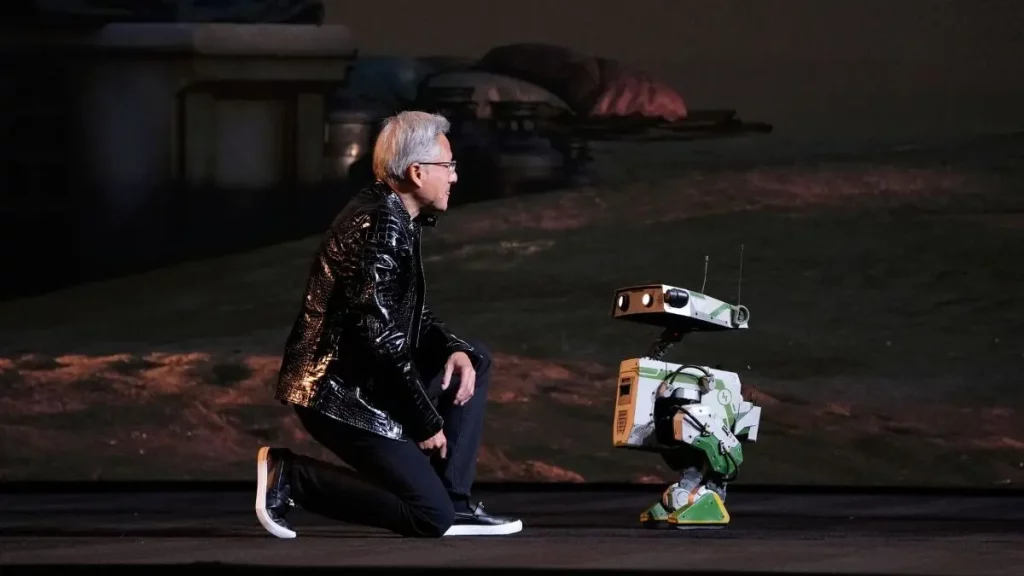

At CES 2026, NVIDIA unveiled its new AI platform, Vera Rubin, which delivers significantly higher performance for training and running advanced AI models. This platform is designed as the foundation for the next generation of data centers and industrial AI solutions. In addition, NVIDIA is increasingly emphasizing the concept of “physical AI,” where artificial intelligence is used in robotics, autonomous driving, industrial automation, and smart factories.

In 2026, demand for AI chips is expected to keep growing, and AI adoption is expected to broaden across industry and real-world applications. At the same time, NVIDIA faces increased competition and regulatory challenges, particularly around chip export controls.

NVIDIA enters 2026 in an exceptionally strong position, with a new generation of AI platforms, advanced graphics technologies, and a clear growth strategy. If the company successfully manages competitive pressures and regulatory constraints, it is likely to remain one of the key players in global technological development for years to come.