This year, Xenya took part in the traditional INFOSEK conference, organized by Palsit in Nova Gorica for the 23rd consecutive year. The event, held under the title Information Security, once again offered a wealth of insightful content and a diverse range of exhibitors.

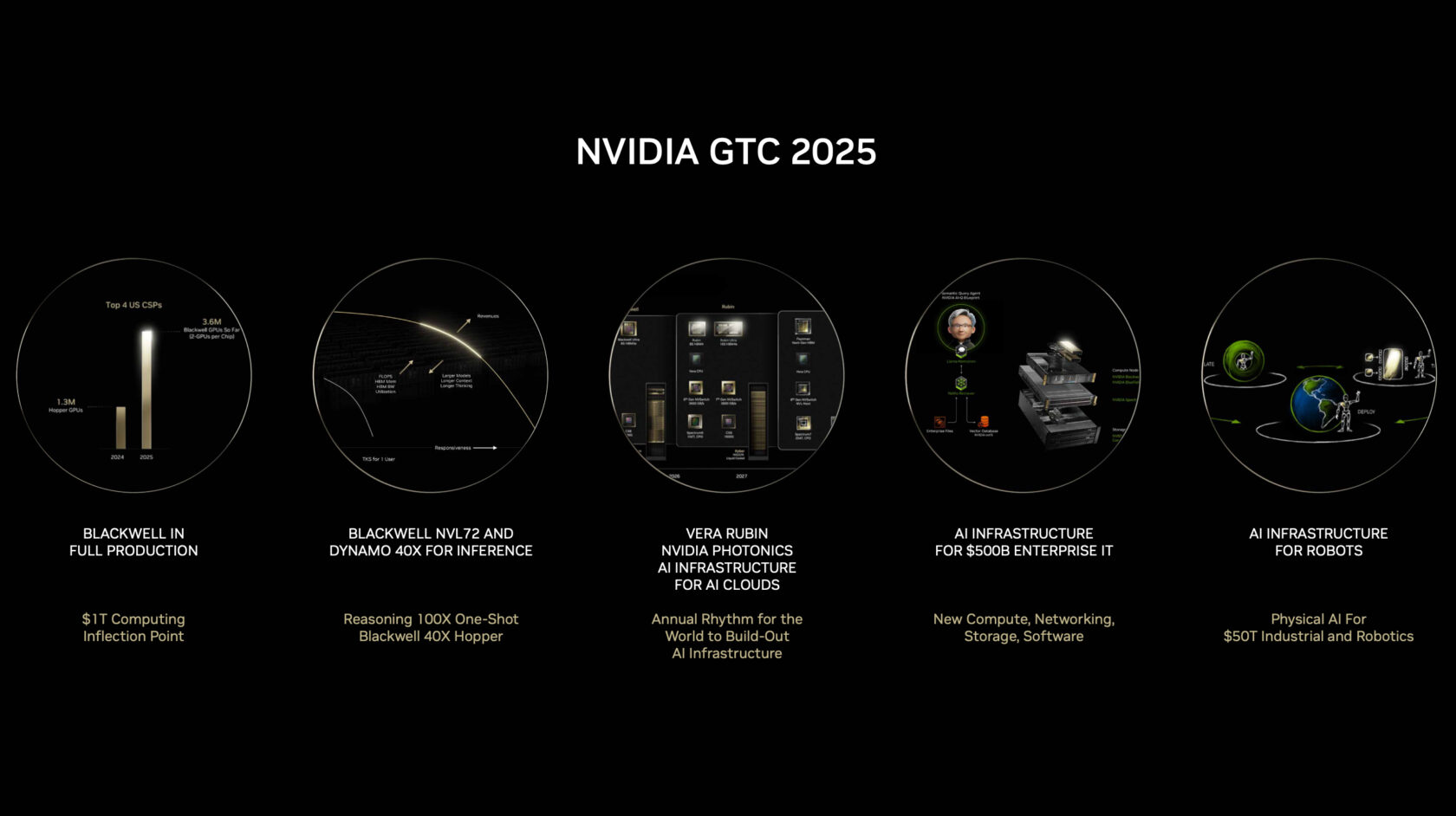

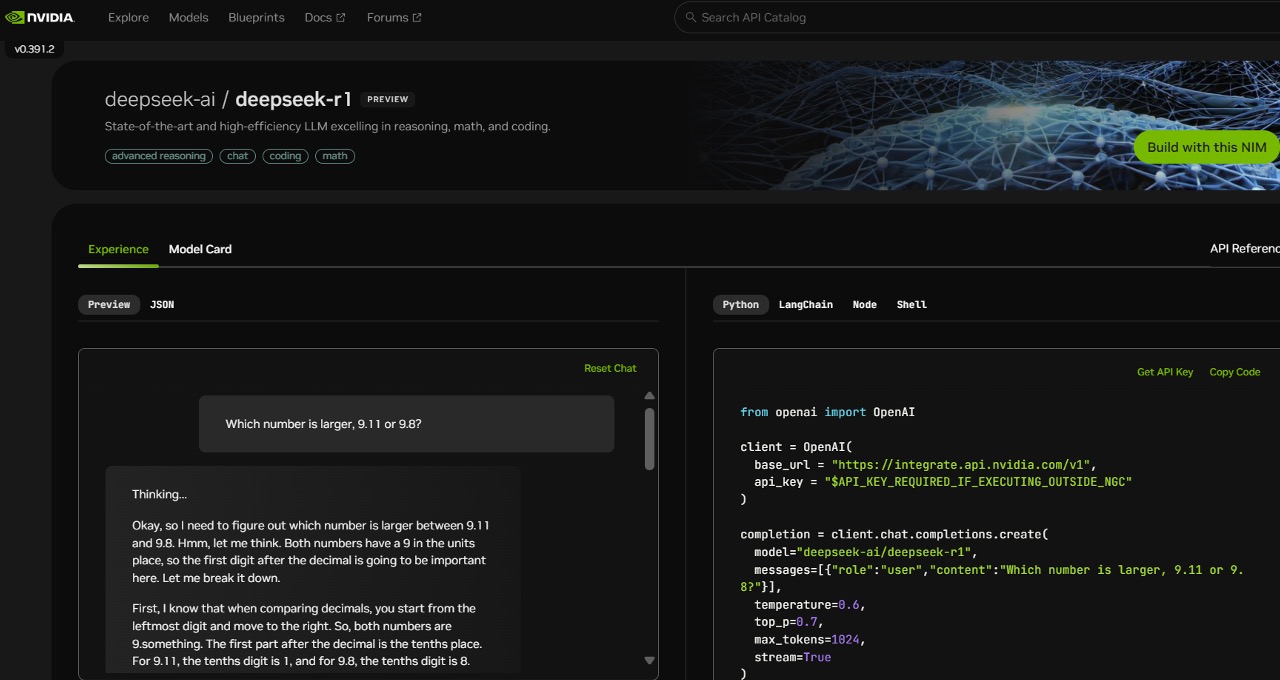

At a time when artificial intelligence and rapid technological advances keep raising new questions, even leading IT experts find it difficult to predict the future. This is why preventive security is crucial: protection, caution, and continuous education are the foundations of a safe digital future.

In addition to the professional program, the conference also provided opportunities for relaxed socializing. Three days of intensive lectures and discussions were complemented by evening events, which allowed participants to network and exchange experiences in a more informal setting. By taking part in the INFOSEK conference, Xenya reaffirms its commitment to excellence and responsibility in the field of information security. It also emphasizes the importance of continuous knowledge development and a preventive approach to safeguarding information systems.